Using Waybackurls to find flaws

Written By Indrajeet Bhuyan

It is said that once you put something on the internet, it stays there forever. That is largely true, and services like web archives are what make it possible. In this tutorial, we will look at a tool that takes advantage of archived copies of websites to help us find flaws in our targets.

What is the Wayback Machine?

The Wayback Machine is part of the Internet Archive. Launched to the public in 2001, it lets you go back in time and see what a website looked like at a given point. At the time of writing it holds more than 690 billion web pages, and it keeps adding more every year. Its crawlers capture publicly accessible web pages along with the data files linked from them.

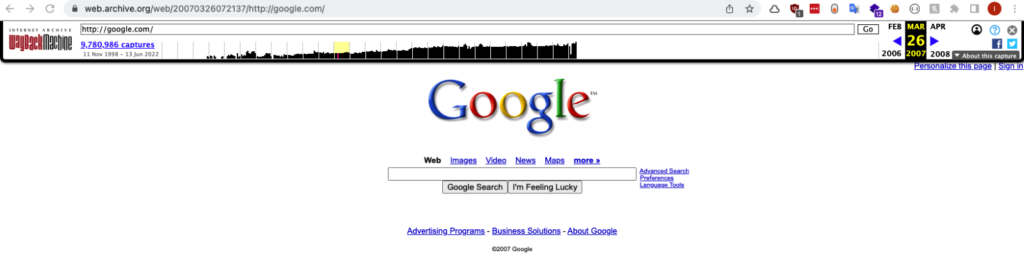

Here is what searching for google.com looks like. As you can see, the captures go back to 1999. We can click on a year and then a date to see what the Google homepage looked like at that time.

Here is what Google’s homepage looked like on 26 March 2007. How often a site is captured varies greatly: typically, the larger (and often more popular) a website, the more frequently it is crawled.
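Every capture also has its own address of the form `https://web.archive.org/web/<timestamp>/<original URL>`, where the timestamp is `YYYYMMDDhhmmss`; when only a prefix such as a date is given, the Wayback Machine redirects to the nearest capture. The sketch below builds such a link, plus a small helper for looking at capture frequency per year. Both function names (`snapshot_url`, `captures_per_year`) are hypothetical helpers of mine, not part of any tool.

```shell
# snapshot_url TIMESTAMP URL
# Print a Wayback Machine link for URL. TIMESTAMP is a prefix of
# YYYYMMDDhhmmss (4 to 14 digits); for a partial timestamp such as a date,
# the Wayback Machine redirects to the closest capture it has.
snapshot_url() {
  ts="$1"
  case "$ts" in
    ''|*[!0-9]*) echo "snapshot_url: timestamp must be digits" >&2; return 1 ;;
  esac
  if [ "${#ts}" -lt 4 ] || [ "${#ts}" -gt 14 ]; then
    echo "snapshot_url: timestamp must be 4 to 14 digits" >&2
    return 1
  fi
  printf 'https://web.archive.org/web/%s/%s\n' "$ts" "$2"
}

# captures_per_year
# Read capture timestamps (one per line) on stdin and print "YEAR COUNT"
# pairs, oldest year first.
captures_per_year() {
  cut -c1-4 | sort | uniq -c | awk '{ print $2, $1 }'
}

# Google's homepage as archived on 26 March 2007:
snapshot_url 20070326 https://www.google.com/
```

The timestamps can come from the Wayback Machine's CDX API, for example `curl -s 'https://web.archive.org/cdx/search/cdx?url=example.com&fl=timestamp' | captures_per_year`. Very popular sites have a huge number of captures, so a smaller site makes for a quicker experiment.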

Now the question is: why am I talking about this service, and how can it help us find flaws in websites?

Here is the answer:

- Web developers often change a website’s front end without changing its back end. If we only look at the current pages, we might miss interesting endpoints that the developer removed from the page but that still exist on the back end.

- Sometimes, when a flaw is found in a website, developers simply remove the link to it instead of fixing it. The reasoning is that once it is no longer linked from the site, nobody will know about it, so nobody will exploit it. The vulnerable endpoint, however, may still be reachable.
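Both cases come down to one check: which paths appear in the archive but are no longer linked from the live site. Here is a minimal sketch, assuming you already have two plain-text lists with one URL per line, one from the Wayback Machine and one from crawling the live site. The function names `to_paths` and `removed_paths` are hypothetical, and reducing URLs to bare paths is my own simplification.

```shell
# to_paths FILE
# Reduce full URLs to their path (drop scheme, host, query string and
# fragment) and de-duplicate, so http/https variants collapse together.
to_paths() {
  sed -E 's#^[a-zA-Z]+://[^/]*##; s/[?#].*$//; s#^$#/#' "$1" | sort -u
}

# removed_paths ARCHIVED CURRENT
# Print paths present in ARCHIVED but absent from CURRENT: candidates that
# may have been unlinked from the front end while the back end still
# serves them.
removed_paths() {
  tmp_old=$(mktemp) && tmp_new=$(mktemp) || return 1
  to_paths "$1" > "$tmp_old"
  to_paths "$2" > "$tmp_new"
  # comm -23 keeps only lines unique to the first sorted input.
  comm -23 "$tmp_old" "$tmp_new"
  rm -f "$tmp_old" "$tmp_new"
}

# Usage (hypothetical file names):
#   removed_paths archived.txt current.txt
```

Each path it prints still has to be requested against the live site, because many removed pages really are gone; the list just tells you where to look first.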

Using the Wayback Machine, we can discover such endpoints and increase our attack surface. Doing this manually takes a lot of time, though, so let me introduce a very useful tool called Waybackurls.

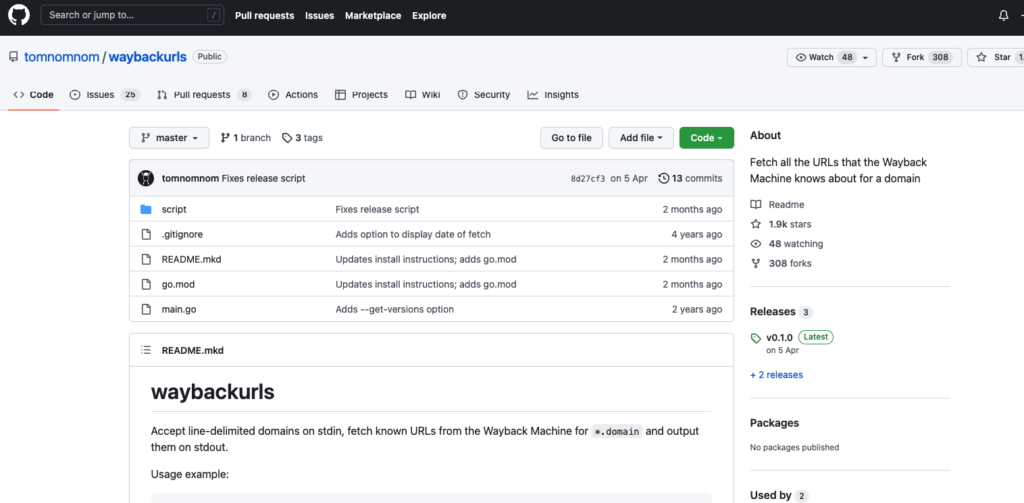

You can download the tool from here: https://github.com/tomnomnom/waybackurls

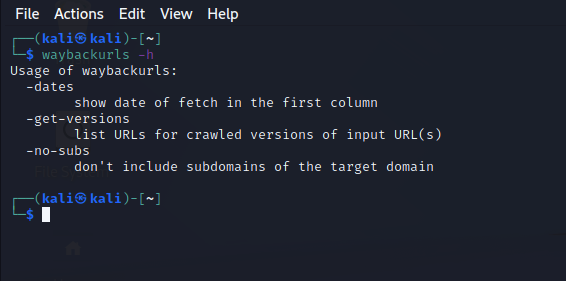

As the name suggests, this tool fetches all the URLs that the Wayback Machine knows about for a domain. Once we have those URLs, we can test the endpoints for flaws or fuzz them.
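The list comes from the Wayback Machine's CDX server API, and it can also be queried by hand with `curl`, which is roughly what the tool automates. The sketch below is under my own assumptions: the function names are hypothetical, and the parameters are my choice rather than the exact request waybackurls sends. `matchType=domain` covers the host plus its subdomains, `fl=original` returns only the captured URL, and `collapse=urlkey` drops adjacent duplicate captures.

```shell
# cdx_query_url DOMAIN
# Build a CDX API query covering DOMAIN and all of its subdomains.
#   matchType=domain  the host itself plus every *.DOMAIN subhost
#   fl=original       only return the originally captured URL
#   collapse=urlkey   skip adjacent captures of the same URL
cdx_query_url() {
  printf 'https://web.archive.org/cdx/search/cdx?url=%s&matchType=domain&fl=original&collapse=urlkey\n' "$1"
}

# archived_urls DOMAIN
# Download the list (needs curl and network access), one URL per line,
# with duplicates removed.
archived_urls() {
  curl -s "$(cdx_query_url "$1")" | sort -u
}

# Usage:
#   archived_urls tesla.com
```

For a large site the response can be very big, which is one reason a dedicated tool is more convenient than hand-written queries.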

Installing the tool is very simple. With Go installed, run the following command:

go install github.com/tomnomnom/waybackurls@latest
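`go install` places the compiled binary in `$GOBIN` if that variable is set, otherwise in `$(go env GOPATH)/bin`, and that directory is not always on the shell's `PATH`. If `waybackurls` is reported as "command not found" after installing, something like the sketch below can help. `add_to_path` is a hypothetical helper name; you could run this in your shell or add it to your shell profile.

```shell
# add_to_path DIR
# Append DIR to PATH unless it is already present.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;                        # already on PATH, nothing to do
    *) PATH="$PATH:$1"; export PATH ;;
  esac
}

# Where `go install` puts binaries: $GOBIN if set, else $(go env GOPATH)/bin.
if command -v go >/dev/null 2>&1; then
  gobin="$(go env GOBIN)"
  [ -n "$gobin" ] || gobin="$(go env GOPATH)/bin"
  add_to_path "$gobin"
fi

# Confirm the tool can now be found.
command -v waybackurls || echo "waybackurls not found on PATH" >&2
```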

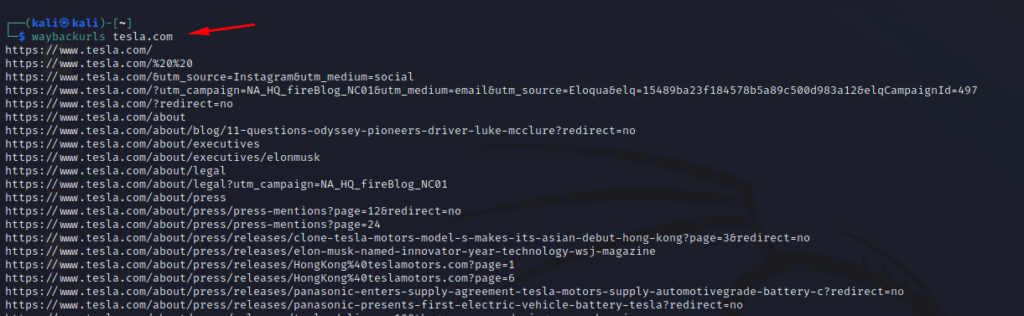

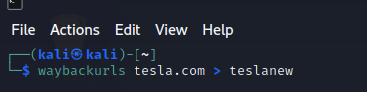

In this example, I’ll fetch the archived URLs for tesla.com.

Once the command runs, we can see that the tool has fetched thousands of URLs from the Wayback Machine.

We can save these URLs to a file by running the following command:

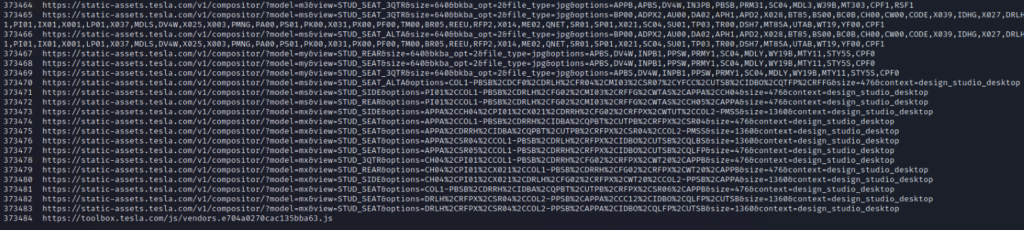

Opening the file, we can see that the tool found about 373,484 URLs in the Wayback Machine.
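As its README shows, waybackurls reads domains from standard input and writes URLs to standard output, so saving and counting the results is ordinary shell redirection. The exact command in the screenshot may differ from this sketch. The helper names and the file name `tesla_urls.txt` are hypothetical, and the `sort -u` step is my own addition that removes duplicate lines, so your count may come out lower than the tool's raw output.

```shell
# save_archived_urls DOMAIN OUTFILE
# Run waybackurls for DOMAIN, de-duplicate, and save one URL per line.
save_archived_urls() {
  echo "$1" | waybackurls | sort -u > "$2"
}

# count_lines FILE
# Number of lines (URLs) in FILE, without wc's padding.
count_lines() {
  wc -l < "$1" | tr -d ' '
}

# Usage (needs network access and waybackurls on PATH):
#   save_archived_urls tesla.com tesla_urls.txt
#   count_lines tesla_urls.txt
```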

Now we can use this information to find hidden endpoints, or search for keywords such as redirect to check whether any open redirect flaws exist. We can do a lot more; it all depends on our creativity.
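As a starting point for the open redirect idea, the sketch below pulls out URLs whose query string contains a parameter name that often carries a redirect target. The parameter list is my own guess, not an exhaustive or authoritative one, and `redirect_candidates` is a hypothetical name. A match only means the URL is worth testing by hand.

```shell
# redirect_candidates FILE
# Print unique URLs with a query parameter whose name suggests a redirect
# target (case-insensitive). The parameter names are an assumed guess list.
redirect_candidates() {
  grep -iE '[?&](redirect[[:alnum:]_]*|url|next|return[[:alnum:]_]*|dest|destination|goto|continue)=' "$1" | sort -u
}

# Usage:
#   redirect_candidates tesla_urls.txt
```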

Here is a write-up by a bug hunter called ‘Sicksec’, who used this tool to find a flaw that earned him a USD 1,000 bounty.

Read his writeup here: https://infosecwriteups.com/how-i-scored-1k-bounty-using-waybackurls-717d9673ca52

Here is one more write-up where the author takes it to the next level. He first collected all the URLs with waybackurls and then fed them to other tools that look for XSS. With this method, he automated the whole process of finding XSS.
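The general shape of such a pipeline: collect URLs, keep the ones with query parameters, collapse URLs that differ only in parameter values, and hand the rest to a scanner. The sketch below does the middle steps in plain shell, standing in for what a GF pattern would do in that write-up; it is not the author's exact method, and `param_urls` is a hypothetical name. The final step assumes Dalfox is installed and uses its `pipe` mode, which reads targets from standard input. Check the linked write-up and Dalfox's documentation for the options actually used.

```shell
# param_urls FILE
# Keep URLs that have at least one name=value query parameter, replace
# every value with the placeholder FUZZ, and de-duplicate, so URLs that
# differ only in their values collapse into one line.
param_urls() {
  grep -E '[?&][^=&#]+=' "$1" | sed -E 's/=[^&#]*/=FUZZ/g' | sort -u
}

# Usage (needs waybackurls and dalfox on PATH):
#   echo example.com | waybackurls > urls.txt
#   param_urls urls.txt | dalfox pipe
```

Collapsing parameter values before scanning is a design choice to avoid sending the scanner hundreds of near-identical URLs; it can hide cases where only a specific value triggers a bug.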

You can read about it here: https://infosecwriteups.com/automating-xss-using-dalfox-gf-and-waybackurls-bc6de16a5c75

If you read more write-ups on how people use this tool, you will get a good idea of how to apply it in different scenarios.