When pentesting, it is very important to know as many details as possible about the target: the more we know about it, the more flaws we are likely to find. In this article, I would like to introduce you to GoSpider, a tool written in Go that can help you understand your target better.

What is crawling/spidering?

A “crawler” (sometimes also called a “robot” or “spider”) is a generic term for any program that automatically discovers and scans websites by following links from one webpage to another. Similarly, a web crawler, spider, or search engine bot downloads and indexes content from all over the Internet. The goal of such a bot is to learn what (almost) every webpage on the web is about, so that the information can be retrieved when it’s needed.

Crawling is very useful in pentesting because it helps you understand what a website looks like, what functionality it offers, and where to focus your efforts. Several crawlers are available for pentesting; Burp Suite, for example, has its own built-in crawler. What makes GoSpider special is its speed: it delivers results in a very short time compared to many similar tools.

Here are some of the features of GoSpider:

- Fast web crawling

- Brute force and parse sitemap.xml

- Parse robots.txt

- Generate and verify links from JavaScript files

- Link Finder

Installation

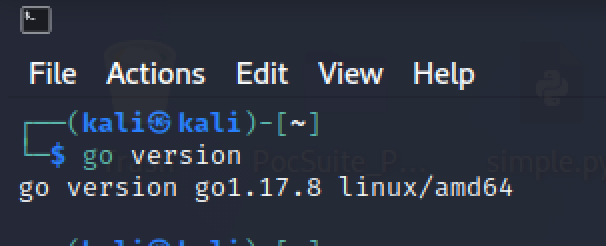

Because GoSpider is written in Go, you need a Go environment on your system. If Go is not installed on your system, you can follow this quick tutorial: https://go.dev/doc/install.

Once Go is installed, run the following command to verify the installation:

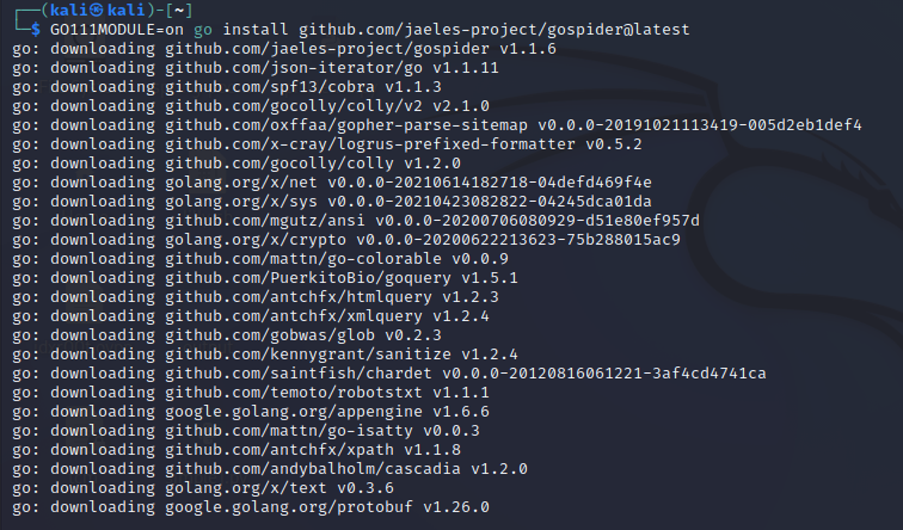

Next, install GoSpider from its GitHub repository by running this command (go install downloads, builds and installs the tool, so there is no need to clone the repository):

GO111MODULE=on go install github.com/jaeles-project/gospider@latest

Next, copy the gospider binary, which go install places in ~/go/bin by default, into the /usr/bin directory so that the tool can be run from anywhere. Use the following command:

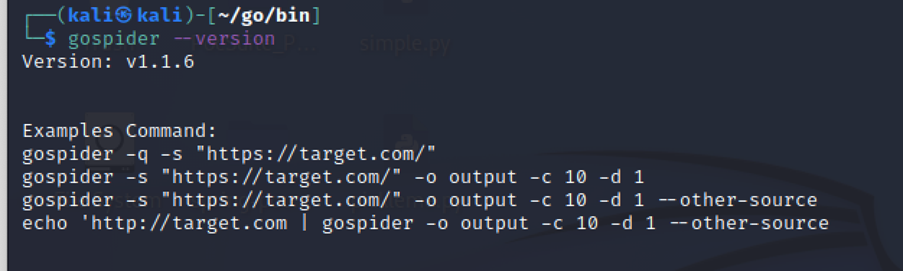

Run the following command to check the version of GoSpider:

gospider --version

At the time of writing, the installed version is v1.1.6, the latest release of GoSpider. Now we can start spidering/crawling websites to gather information that comes in handy during a pentest.

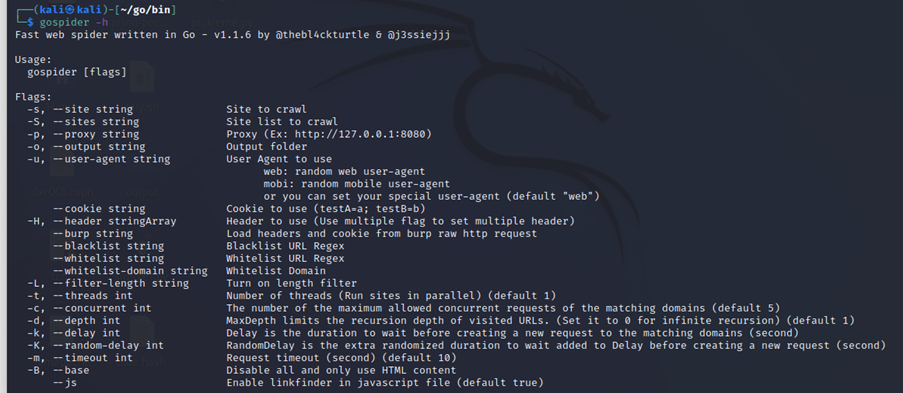

Run gospider -h to see the help page, which lists the different features we can use in GoSpider.

The tool provides several flags that can be used while crawling a website.

Running the GoSpider tool

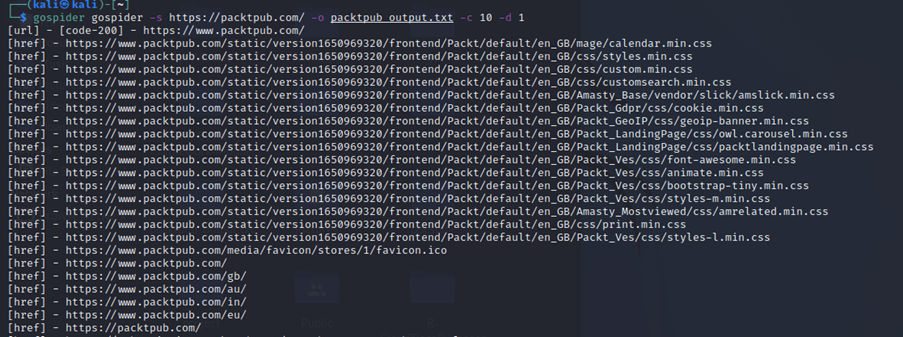

- First, we will crawl a single website and save the results to an output folder

For this example, we will use Packt’s official website.

Command :

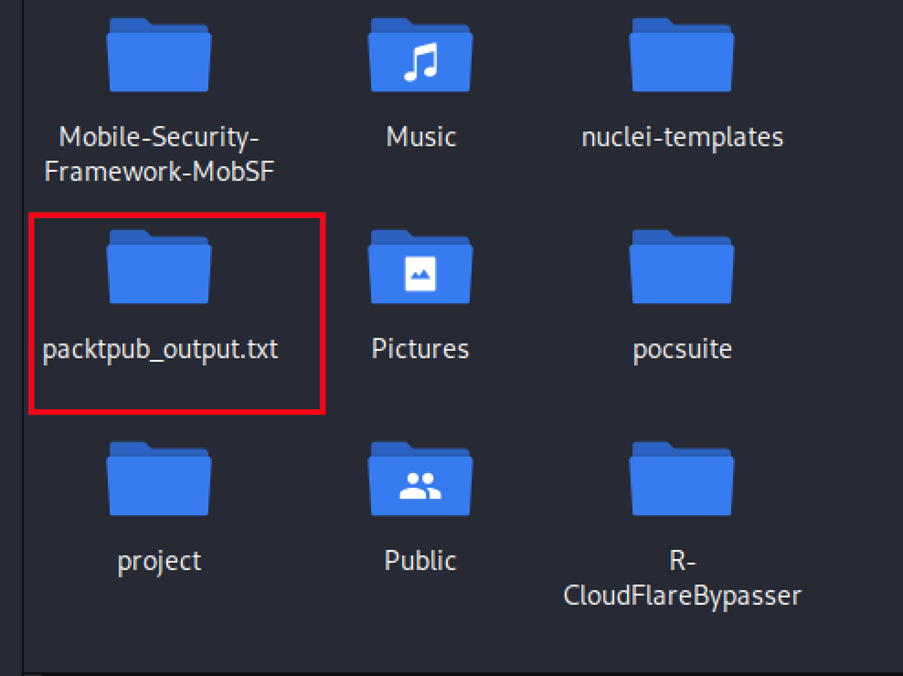

gospider -s "https://www.packtpub.com/" -o packtpub_output.txt -c 10 -d 1

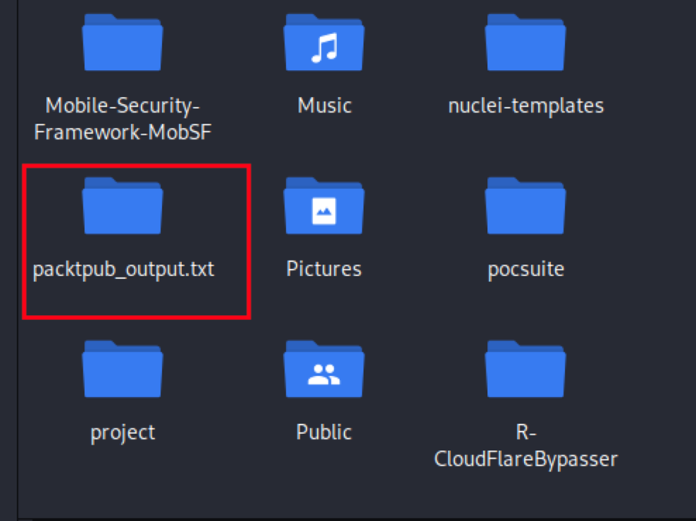

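The -o flag takes an output folder name, so this command creates a folder called packtpub_output.txt containing a file with the crawl results. The -c 10 flag allows up to 10 concurrent requests, and -d 1 limits the crawl depth to 1.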

- Run with list of sites

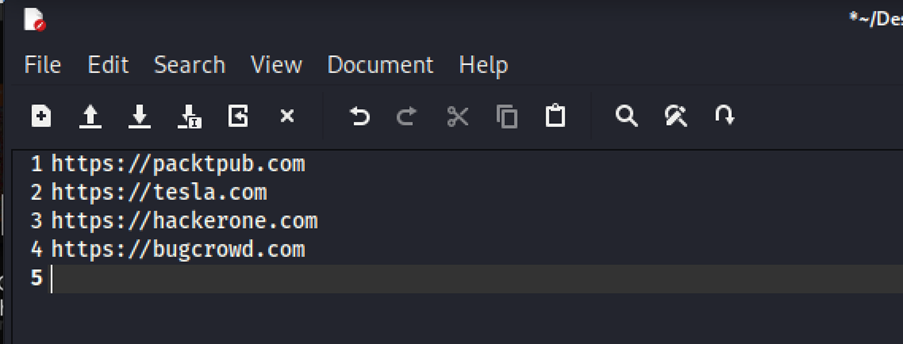

Here we can pass a file containing a list of websites, which GoSpider will crawl, saving the results for each.

Command : gospider -S list.txt -o output -c 10 -d 1

For this example, I made a list of four websites:

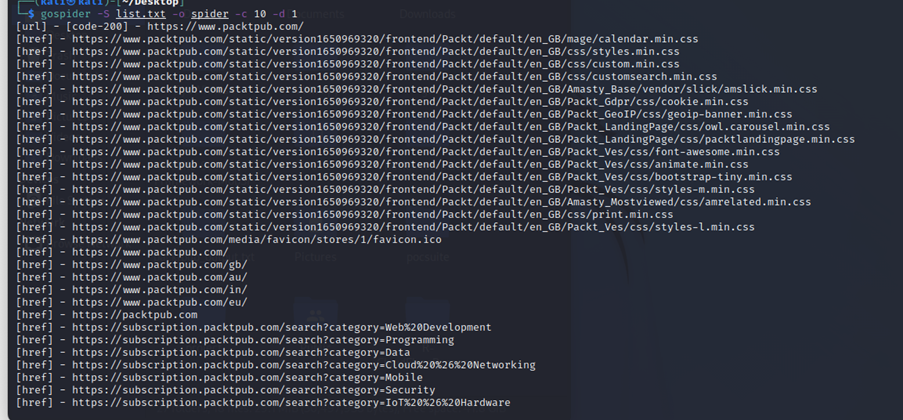

Now run the following command to crawl multiple sites:

Command : gospider -S list.txt -o spider -c 10 -d 1

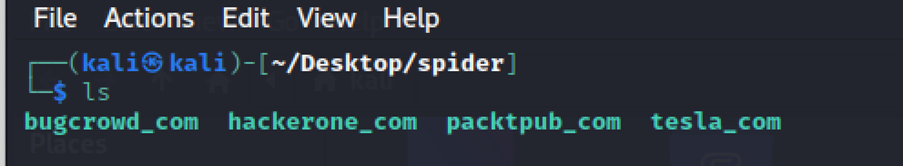

The tool automatically creates a separate output file for each website, which we can later analyse to better understand the website and its functionality.

- Get URLs from 3rd party

What makes this tool even more powerful is its third-party integration. I previously wrote an article on how the Wayback Machine archive can be used in pentesting, which also showed a tool for doing it. You can read it here: https://security.packt.com/using-waybackurls-to-find-flaws/

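GoSpider can pull URLs from the Wayback Machine archive (Archive.org), as well as from other third-party sources such as CommonCrawl, VirusTotal and AlienVault, which makes the crawl results richer.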

Command : gospider -s https://google.com/ -o thirdparty -c 10 -d 1 --other-source --include-subs

The --include-subs flag also includes subdomains found by the third-party sources; by default, only the main domain is kept.

Is it worth using GoSpider?

GoSpider is a powerful and fast crawling tool. It has many more features to explore, and its flags let you customize a crawl to your needs. Knowing the website you are about to pentest is very important, and GoSpider can help you a lot in this process.